Six rounds into the 2019 season seems like a weird time to evaluate the performance of my model in 2018, but that’s what you come to The Arc for: slightly out-of-date graphs about AFL models.

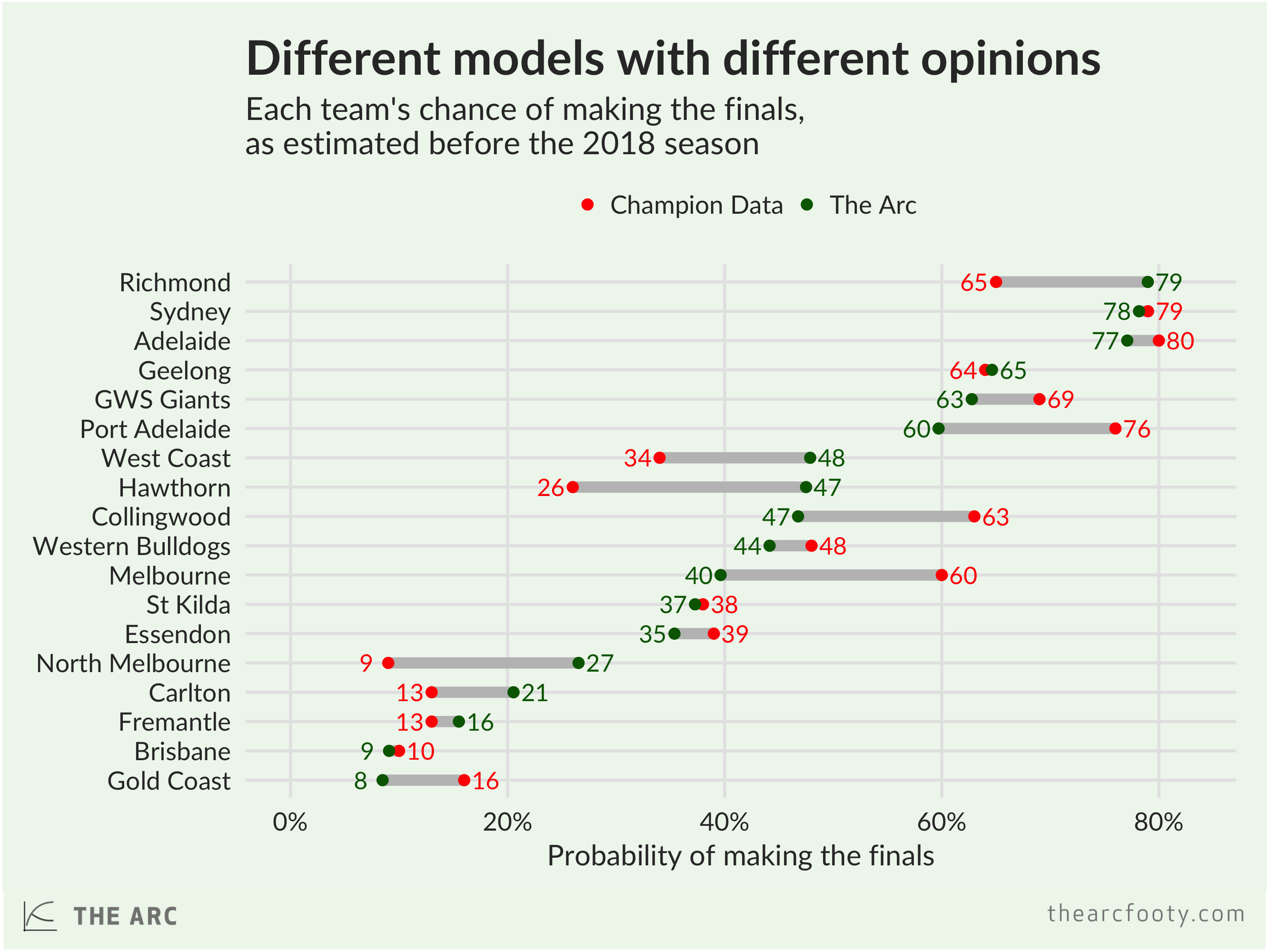

Most modellers, perhaps sensibly, give it a few rounds before they start projecting who will make the finals. But Champion Data and I both estimated how likely each team was to make the finals before a single game of the 2018 season had been played. Overall, our estimates were pretty similar. I was more optimistic about the prospects of Richmond, West Coast, Hawthorn and North Melbourne; Champion Data was more enthusiastic about Port, Collingwood and Melbourne. A mixed bag.

We can assess the quality of each modeller’s estimates using a Brier score: the mean squared difference between each predicted probability and what actually happened (1 if the team made the finals, 0 if it didn’t). The Brier score ranges from zero to one, with lower scores being better. My pre-season 2018 predictions had a Brier score of 0.173 and Champion Data’s scored 0.194, so my simulations came out just on top.
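For anyone who wants to check the arithmetic, here’s a minimal sketch of the Brier score for finals predictions. The probabilities in the first example are made up for illustration, not real 2018 figures. The second example is a naive baseline that gives all 18 teams the same 8-in-18 chance, assuming the usual top-eight finals format.

```python
# A minimal sketch of the Brier score for "will this team make the finals?" forecasts.
# All probabilities below are hypothetical, not the real 2018 predictions.

def brier_score(probs, outcomes):
    """Mean squared difference between forecast probabilities and 0/1 outcomes."""
    if len(probs) != len(outcomes):
        raise ValueError("probs and outcomes must be the same length")
    return sum((p - o) ** 2 for p, o in zip(probs, outcomes)) / len(probs)

# Hypothetical four-team example: 1 = made the finals, 0 = missed out.
example = brier_score([0.9, 0.6, 0.3, 0.1], [1, 0, 1, 0])
print(round(example, 4))  # prints 0.2175

# Naive baseline: every one of 18 teams gets an 8/18 chance; 8 make it, 10 miss.
naive = brier_score([8 / 18] * 18, [1] * 8 + [0] * 10)
print(round(naive, 3))  # prints 0.247
```

The naive baseline gives a rough sense of scale: a forecast that knows nothing about the teams scores around 0.247, so anything meaningfully below that is doing some work.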

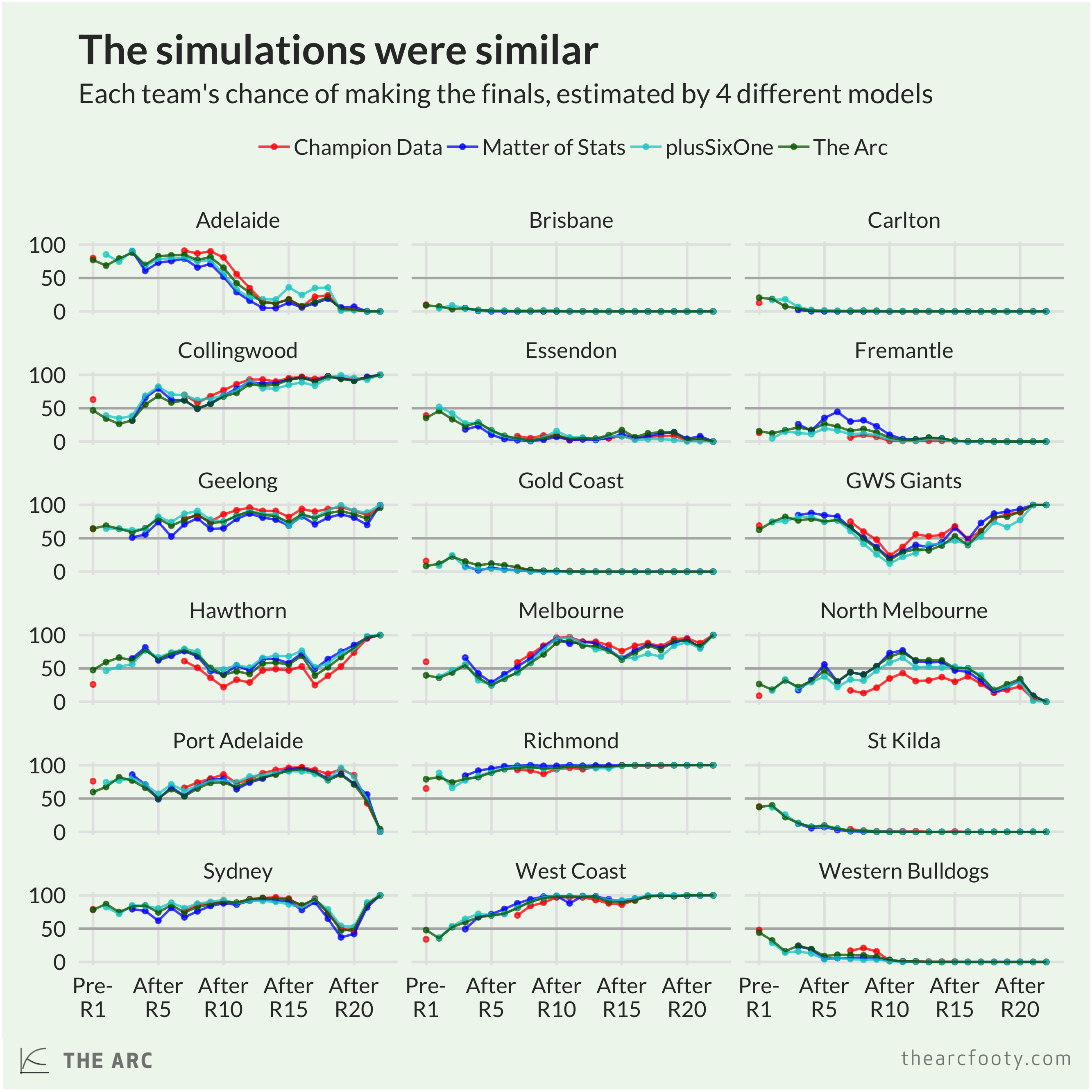

If we move beyond the pre-season simulations, we have some other modellers to evaluate. James at plusSixOne kicked off his simulations after Round 1, and Tony at Matter of Stats started after Round 3. Champion Data, after its pre-season predictions, resumed publishing predictions after Round 7. Overall, the models’ estimates of each team’s chance of making the finals were pretty similar, as you can see.

Using each model’s predictions, we can calculate a Brier score for each model after each round. As you’d expect, the accuracy of predictions improved as the season went on. But, just like in 2017, there’s no clear threshold beyond which the simulations suddenly become reliable.
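Scoring a model after each round just means applying the same Brier score to whichever set of predictions it published at that point. A minimal sketch, assuming each round’s predictions are stored as a hypothetical `{team: probability}` dictionary and the final outcomes as `{team: 0 or 1}`; the team names and numbers are placeholders.

```python
# A minimal sketch: compute one model's Brier score for each round it predicted.
# Team names, rounds and probabilities are hypothetical placeholders.

def brier_score(probs, outcomes):
    """Mean squared difference between forecast probabilities and 0/1 outcomes."""
    return sum((p - o) ** 2 for p, o in zip(probs, outcomes)) / len(probs)

def brier_by_round(predictions, outcomes):
    """predictions: round -> {team: probability of making the finals}.
    outcomes: team -> 1 if the team made the finals, else 0."""
    scores = {}
    for rnd, probs in sorted(predictions.items()):
        teams = sorted(probs)
        scores[rnd] = brier_score(
            [probs[t] for t in teams], [outcomes[t] for t in teams]
        )
    return scores

outcomes = {"Team A": 1, "Team B": 0, "Team C": 1}
predictions = {
    1: {"Team A": 0.6, "Team B": 0.5, "Team C": 0.4},
    10: {"Team A": 0.8, "Team B": 0.3, "Team C": 0.7},
    20: {"Team A": 1.0, "Team B": 0.1, "Team C": 0.9},
}
round_scores = brier_by_round(predictions, outcomes)
for rnd, score in round_scores.items():
    print(rnd, round(score, 3))
```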

In 2017, my predictions had a slightly lower (better) Brier score than Champion Data’s or Matter of Stats’. In 2018, the tables turned. Looking only at the rounds in which every model made a prediction, Tony at Matter of Stats had the best Brier score at 0.0853, followed by Champion Data at 0.0866, James at plusSixOne at 0.0870, and me at 0.0891. All pretty similar, but mine performed a bit worse through the middle of the season, around rounds 11 to 13, which cost me.
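Restricting the comparison to rounds that every model covered stops a model from being flattered (or punished) by rounds the others skipped. Here’s a minimal sketch, assuming the overall figure is a simple mean of per-round Brier scores over those shared rounds; that averaging rule is an assumption, and the model names and scores are hypothetical.

```python
# A minimal sketch: average each model's per-round Brier scores over the rounds
# that every model predicted. Assumes a simple mean; all numbers are hypothetical.

def common_round_means(scores_by_model):
    """scores_by_model: model name -> {round: Brier score}."""
    common = set.intersection(*(set(s) for s in scores_by_model.values()))
    if not common:
        raise ValueError("no round was predicted by every model")
    return {
        model: sum(scores[r] for r in common) / len(common)
        for model, scores in scores_by_model.items()
    }

scores_by_model = {
    "Model A": {0: 0.17, 7: 0.12, 8: 0.11, 9: 0.10},
    "Model B": {7: 0.13, 8: 0.10, 9: 0.09},
    "Model C": {3: 0.15, 7: 0.12, 8: 0.12, 9: 0.11},
}
means = common_round_means(scores_by_model)
# Lowest (best) mean first.
ranking = sorted(means, key=means.get)
for model in ranking:
    print(model, round(means[model], 4))
```

Here only rounds 7, 8 and 9 count, so Model A’s round 0 score and Model C’s round 3 score are ignored.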

All up: I’m happy that simulations based on my model are performing more or less as well as other models. I’d love to revamp my model – and I have some ideas about how I’ll do it – but even without a revamp I think the 2018 results are quite respectable.